Mobile’s next big thing is unraveling, it’s going to get messy, and it may already be too late to stop it

As we head into 2026, mobile companies have a serious problem.

What we’ve been told is the next big thing in smartphones, AI, has a growing, almost entirely negative public reputation, and it’s not showing any signs of changing.

Because it’s all unraveling, smartphone makers need to quickly give us AI features that are valuable, and better explain why we should use the ones we already have. Otherwise, they risk a messy backlash that threatens to cancel out the good things possible with AI today.

AI has no purpose

At least, for consumers

I was prompted to write this after watching a video posted to the YouTube channel Upper Echelon, titled “Total stagnation: The AI ‘nothing’ services.”

The thesis is that current Large Language Models (LLMs) have no purpose for consumers, are solutions looking for problems, and will fail because of it.

Many of the opinions voiced are valid, but what caught my attention most was the following quote:

The list of examples shoehorning AI into every conceivable industry or product purely because of misguided AI hype could almost go on forever.

Anyone remotely interested in tech will likely completely agree with this statement. I know I do.

The video goes on to mention products like the Rabbit R1 and the Humane AI Pin as examples of bad AI products, but does admit smart glasses may suit AI well in the future, with the caveat:

The lion’s share of these products right now are trying to replace the smartphone by doing things worse, slower, and with a higher rate of errors compared to what apps already do, and what a human user is easily capable of.

The trouble is, it’s hard to argue with this. The comments are mostly in agreement, with only very few speaking out about how LLMs can be useful.

Upper Echelon is a tech-adjacent channel, and because AI features on our phones, outside LLMs, are largely pretty useless, the mostly negative opinion is unlikely to be out of step with the growing mainstream audience being exposed to AI.

But it’s only the start of AI’s reputational problems.

The AI bubble

Whether it bursts or not doesn’t matter

In November, the “AI bubble” was big news almost everywhere, with opinions on whether it exists and, if it does, whether or when it will burst.

What makes this fascinating is that a large percentage of the coverage came from outside the tech space.

An excellent example is Coffeezilla’s video, titled “We are not Enron.” Coffeezilla mostly talks about cryptocurrency, but this video took a look at how Nvidia and OpenAI are believed to be feeding a circular AI economy.

It’s fascinating and very accessible to those outside tech. It also paints a bleak picture of what’s happening.

Host Coffeezilla had so much to say on the subject, he made another video titled “The state of the AI bubble” on his second channel, and again, it wasn’t particularly positive.

Combined, these two videos have more than three million views.

For those interested in a more in-depth, data-driven look at the AI circular economy, Hank Green — best known for his science videos, and Google’s best app of the year, Focus Friend — made a video titled “The state of the AI industry is freaking me out.”

It has 3.2 million views, and doesn’t do much to convince anyone we’re not in the middle of an AI bubble.

AI becomes politicized

And a lot of people are paying attention

This kind of negative attention goes far beyond the environmental concerns surrounding AI, which many find easy to ignore.

It’s about money.

And combined with AI beginning to replace jobs, it affects people’s bottom line and, unless you’re Sam Altman, Jensen Huang, Mark Zuckerberg, or one of a few other billionaires, it’s not in a good way.

It’s certain to make people sit up and take notice of AI, evidenced by another video, this time by Canadian broadcaster CBC News.

The host simplified all the AI bubble news into a measured 10-minute video titled “If the AI bubble pops, will the whole U.S. economy go with it?”

That the video tries to answer this question means people are asking it. Almost 500,000 people have watched it to see if it was answered.

AI continues to become more politicized, too.

Just take a look at this video from US Senator Bernie Sanders, which, on the surface, may seem like scaremongering, but it contains very real concerns likely in the minds of regular people. Nearly 600,000 of them have watched it.

Social stigma increases

The end of humanity

Social stigma is also gradually being attached to AI, as tabloids grab stories about people falling in love with AI chatbots. These lurid accounts aren’t positive.

Not only do they raise more questions about AI’s usefulness and its place in society, but they also cancel out the potentially helpful side of AI in the treatment of mental health issues and loneliness.

Those using it are turned into a laughingstock.

Beyond all this real-world AI press, the AI 2027 scenario, written by a group of AI researchers and describing a future in which AI takes over the world and wipes out the human race, gained a huge amount of attention.

There is, as you’d expect, absolutely nothing positive in the outcome.

Doom-laden predictions like this always capture the public’s attention and further paint AI as an unfavorable development no one wants.

What was once something only visible to tech enthusiasts, new and highly engaged smartphone buyers, and software engineers is now very mainstream.

Unfortunately, it’s confusing, concerning, and unsettling coverage, and it’s inevitably going to shape an ever more negative opinion and ultimately put people off AI.

What does it mean for mobile?

It’s people’s first exposure to consumer AI

Let’s bring this back to mobile and what it means for 2026.

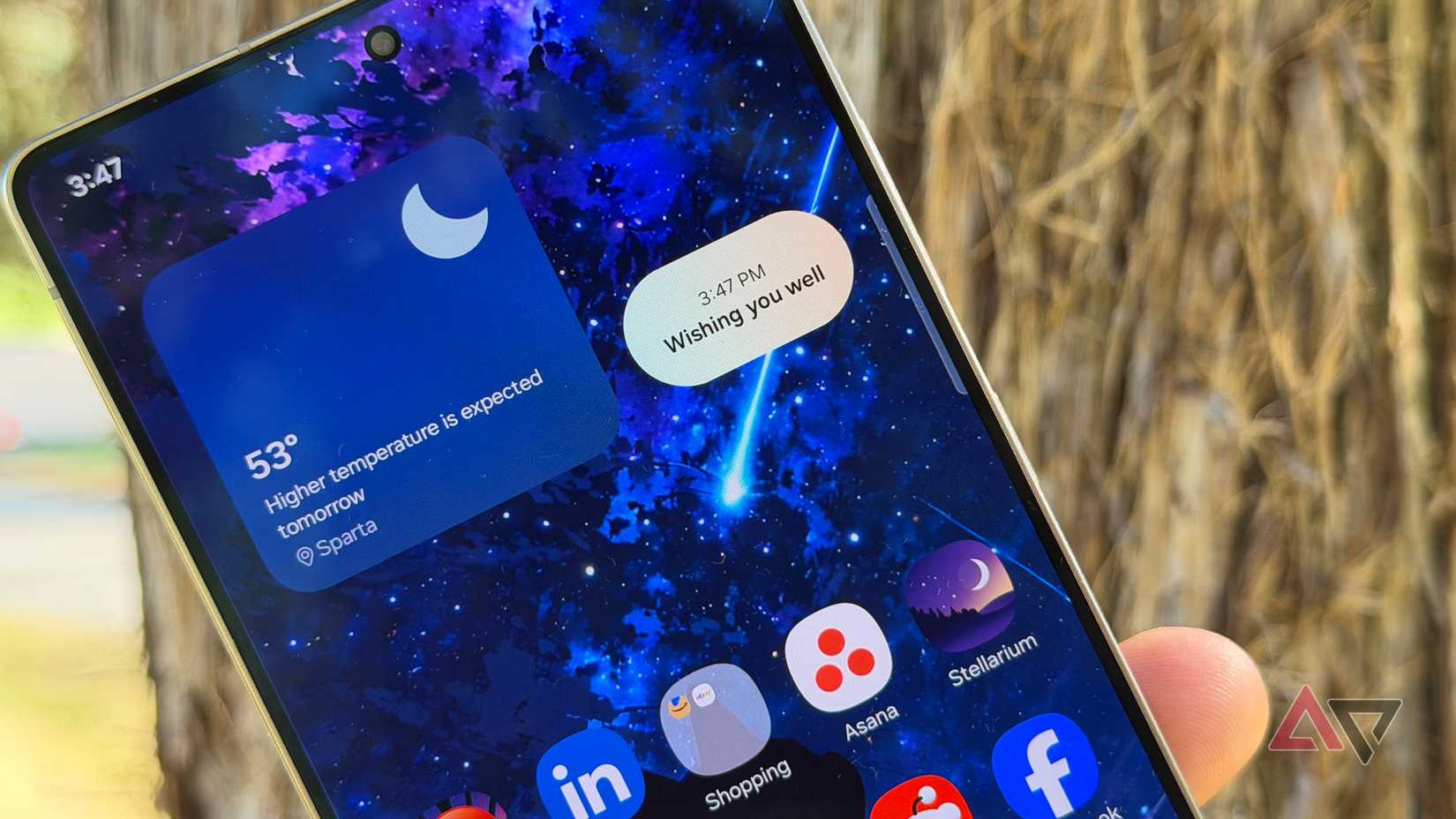

Samsung’s Galaxy AI suite of barely useful tools suddenly looks a bit pathetic next to the huge implications being discussed elsewhere at the moment.

However, it, along with other brands’ efforts, is also likely to be regular people’s first exposure to consumer-level AI.

Those are the same people watching, listening, and getting concerned about the direction AI is taking, and who may think about buying a Galaxy S26.

Galaxy AI has been Samsung’s primary selling point for several generations now. If that’s also the plan for the S26 series, it needs to either become more interesting and useful or be sold in a very different way to hold any positive appeal.

It’s not just Samsung either. The Moto AI tools I tried on the Edge 70 were just as forgettable, as were OnePlus’s Plus Key and its Mindspace feature, which is almost identical to Nothing’s Essential Space.

I struggle to find a use for either.

Apple has been fairly quiet about Apple Intelligence in 2025, and for good reason. It’s rubbish.

Google’s all-in on AI, to the point where the Pixel 10’s specs were entirely overshadowed at launch.

When mostly useless AI features were shoved in front of regular people who didn’t have any opinion about AI at all, it didn’t matter. They didn’t use them and didn’t care.

Now, those same features are being put in front of people whose opinion about AI is being rapidly and actively shaped into one that promotes caution, or worse, negativity.

Bad press and bad features are never a winning combination.

Time for change

AI can be useful

Smartphone makers don’t need users to be wary of headline features.

What if people start to avoid devices with an outwardly heavy reliance on AI in advertising? What if they don’t use the included AI features at all?

If the videos about the finances behind AI talked about above have taught us anything, it’s that a lot of money has been spent on developing AI, so it has to be recouped somewhere.

Companies need to be smarter.

In 2026, they need to find those benefits that Upper Echelon’s video said don’t exist, and give us real-world examples of how all of us can use them. Not examples that are helpful to a handful of people, and not ones set in some dream world created by marketing executives.

I mean genuine, real-world examples.

What’s frustrating is that AI is often excellent.

I regularly use Google Gemini to help with creative tasks and general search when I can’t find the right words for a normal web-based search.

AI chatbots can be a lot of fun, provided you don’t decide to marry one, and there are vibrant communities built around them.

AI has its uses for coding, where even the most inexperienced would-be developer can create an app with little bother, and the much-maligned Rabbit R1, used as an example of a terrible AI product in Upper Echelon’s video, has improved over the last year.

There are plenty of uses for AI, but they’re being sidelined as manufacturers and marketers try to “sell the solution.”

Back to basics

Good features sell phones

None of the uses I mentioned is a “sexy” example of AI working well. Still, they are practical and make me think positively about the benefits of AI, as something far more than silly hardware buttons on the side of a phone that are supposed to help me remember stuff.

These are gimmicks, and combined with a growing negative opinion around AI, they’re going to very quickly cancel out what can be good about it.

Mobile AI isn’t a lost cause, but mobile companies need to react to what’s shaping public opinion around the technology today, and be smarter about the features that use it, how AI is integrated into phones, and how it’s marketed to buyers in the coming year.

AI fatigue will quickly set in, and when an audience is lost, it’s very hard to get them back. Sensible, useful, interesting features will help avoid that.

In 2026, I don’t want to hear words like “summarize,” or phrases like “saves you time so you can do more of what you enjoy,” and I don’t want to see any fireside chats filled with canned questions about how amazing AI is, either.

If mobile AI is going to stick around and not fade away like 3D screens or motorized selfie cameras, making these changes is not a choice, but an essential.